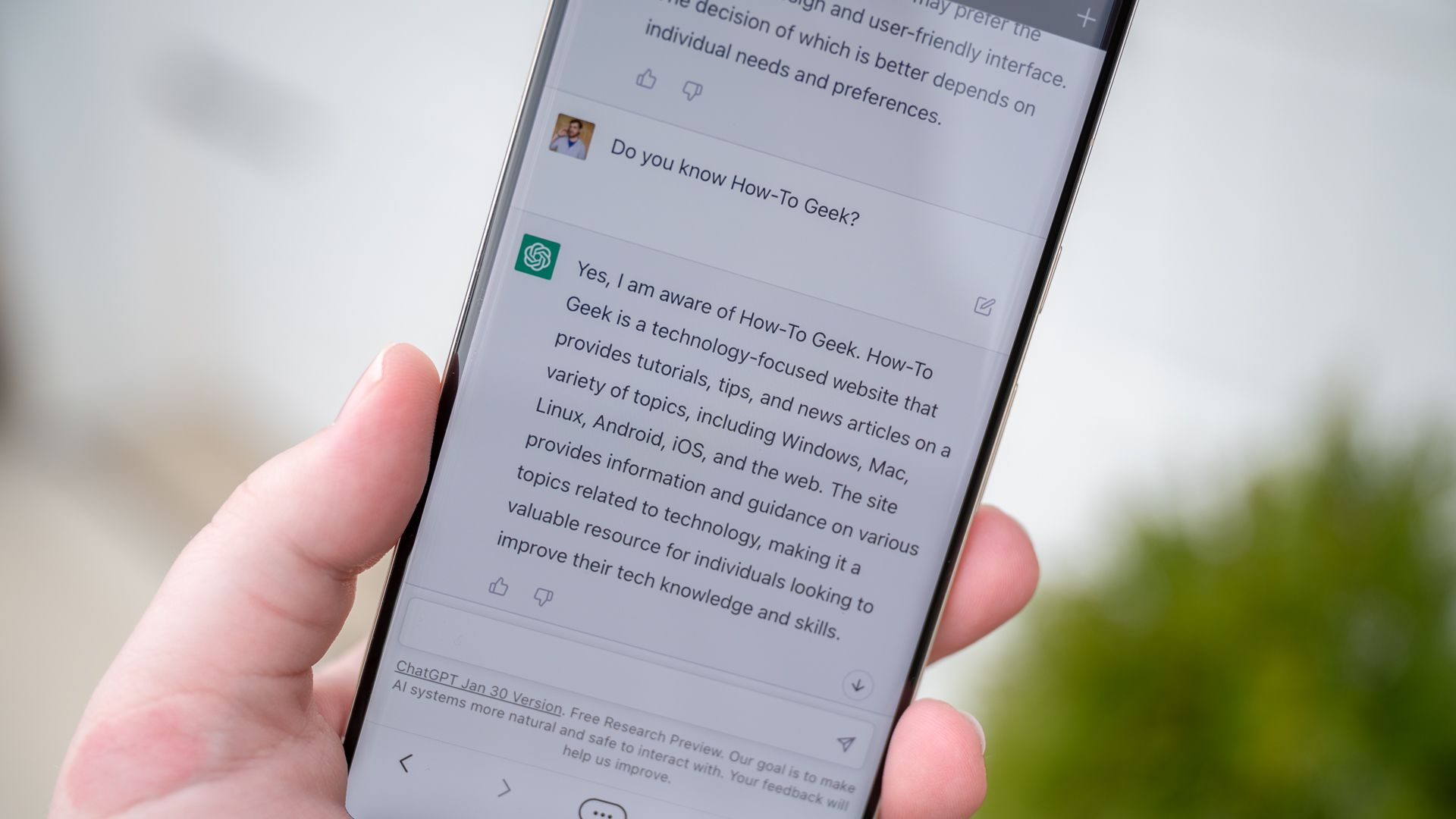

ChatGPT is incredibly powerful and has had a transformative effect on how we interact with computers.

However, like any tool, it’s important to understand its limitations and to use it responsibly.

Here are five things you shouldn’t use ChatGPT for.

While ChatGPT often generates impressively coherent and relevant responses, it’s not infallible.

It can produce incorrect or nonsensical answers.

Its proficiency largely depends on the quality and clarity of the prompt it’s given.


1. Sensitive Information

Avoid entering sensitive information into ChatGPT. This includes financial details, passwords, personal identification information, and confidential data.

Its responses are based on patterns and information available in the data it was trained on.

It can’t understand the nuances and specifics of individual legal or medical cases.

It is not the general-purpose ChatGPT product available to the public.

But it’s essential to remember that the AI doesn’t understand the real-world implications of its output.

Therefore, while it can be a useful tool for brainstorming or exploring ideas, humans should always make the final decisions.

GPT 3.5 has significantly weaker reasoning ability than GPT 4!


It can’t verify information or check facts in real-time.

ChatGPT is prone to “hallucinating” facts that sound true but are completely made up.

AI models like ChatGPT cannot deeply understand or process human emotions.

AI cannot replace the nuanced understanding, emotional resonance, and ethical guidelines inherent to human therapists.

For any serious emotional or psychological issues, always seek help from a licensed mental health professional.

Don’t Use ChatGPT For Math!

However, it’s essential to note that ChatGPT’s forte is language, not mathematics.
