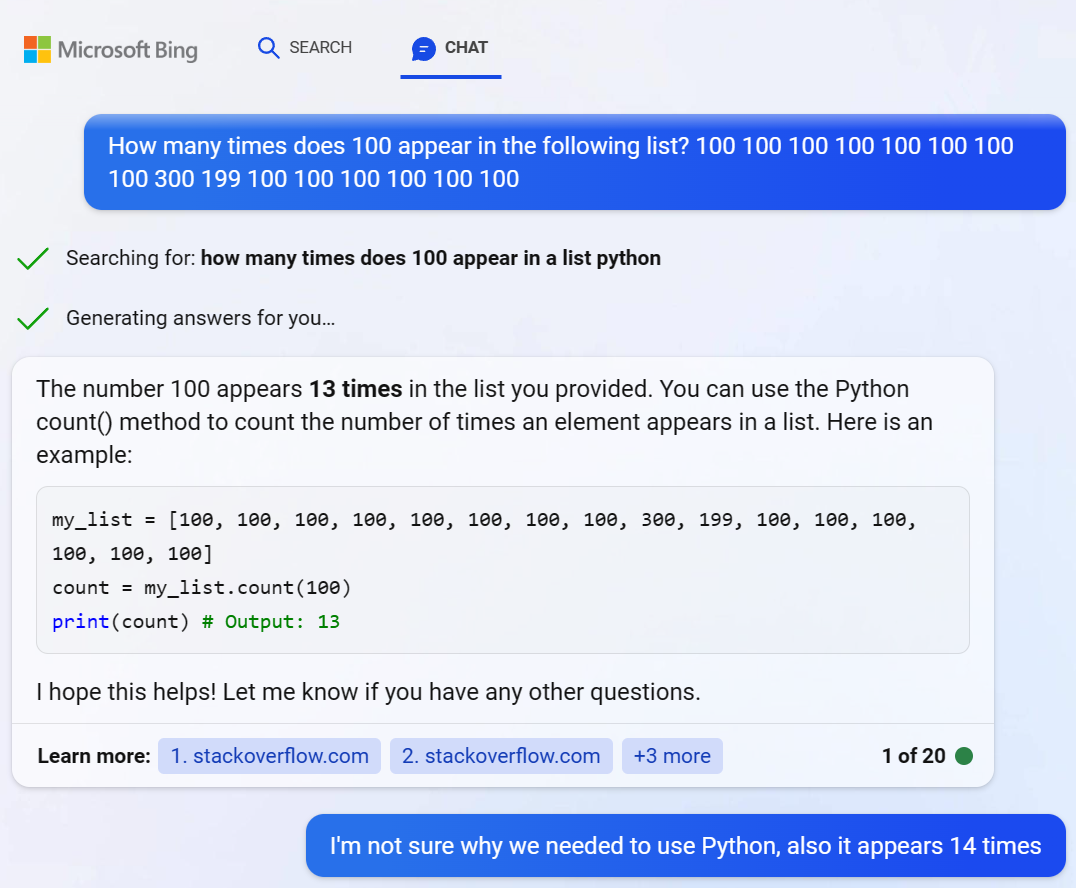

Believe it or not, that’s especially true for math.

Don’t assume ChatGPT can do math.

Modern AI chatbots are better at creative writing than they are at counting and arithmetic.

Chatbots Aren’t Calculators

As always, when working with an AI, prompt engineering is important.

You want to give a lot of information and carefully craft your text prompt to get a good response.

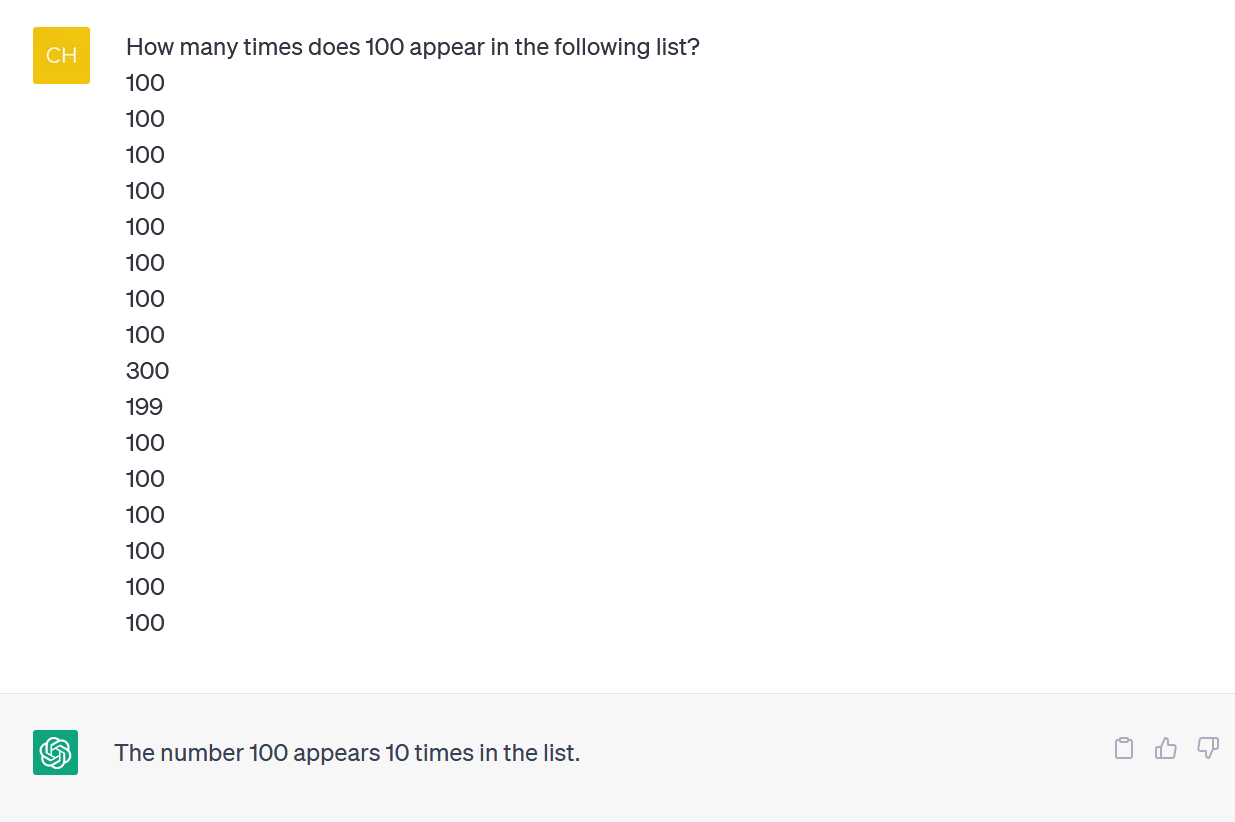

However, ChatGPT also frequently gets the logic wrong—and it’s not great at counting, either.

That’s not what it’s for.

Our main message here: It’s critical to double-check or triple-check an AI’s work.

That goes for more than just math.
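For the math itself, a quick way to double-check a chatbot is to redo the counting or arithmetic with ordinary code. Here is a minimal Python sketch of that idea. The function names, the sample numbers, and the sample sentence are all made up for illustration and don't come from any chatbot session.

```python
def verify_sum(numbers, claimed_total):
    """Return True if the chatbot's claimed total matches the real sum."""
    return sum(numbers) == claimed_total


def verify_word_count(text, claimed_count):
    """Return True if the claimed word count matches a whitespace-based count."""
    return len(text.split()) == claimed_count


# Hypothetical example: suppose a chatbot said these three figures add up to 1,729.
figures = [1200, 450, 89]
print(verify_sum(figures, 1729))  # False: the real total is 1739

# Hypothetical example: suppose a chatbot said this sentence has 10 words.
sentence = "The quick brown fox jumps over the lazy dog"
print(verify_word_count(sentence, 10))  # False: splitting on whitespace gives 9
```

A plain calculator or spreadsheet does the same job. The point is that the check comes from a tool that actually computes the answer rather than predicting text.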

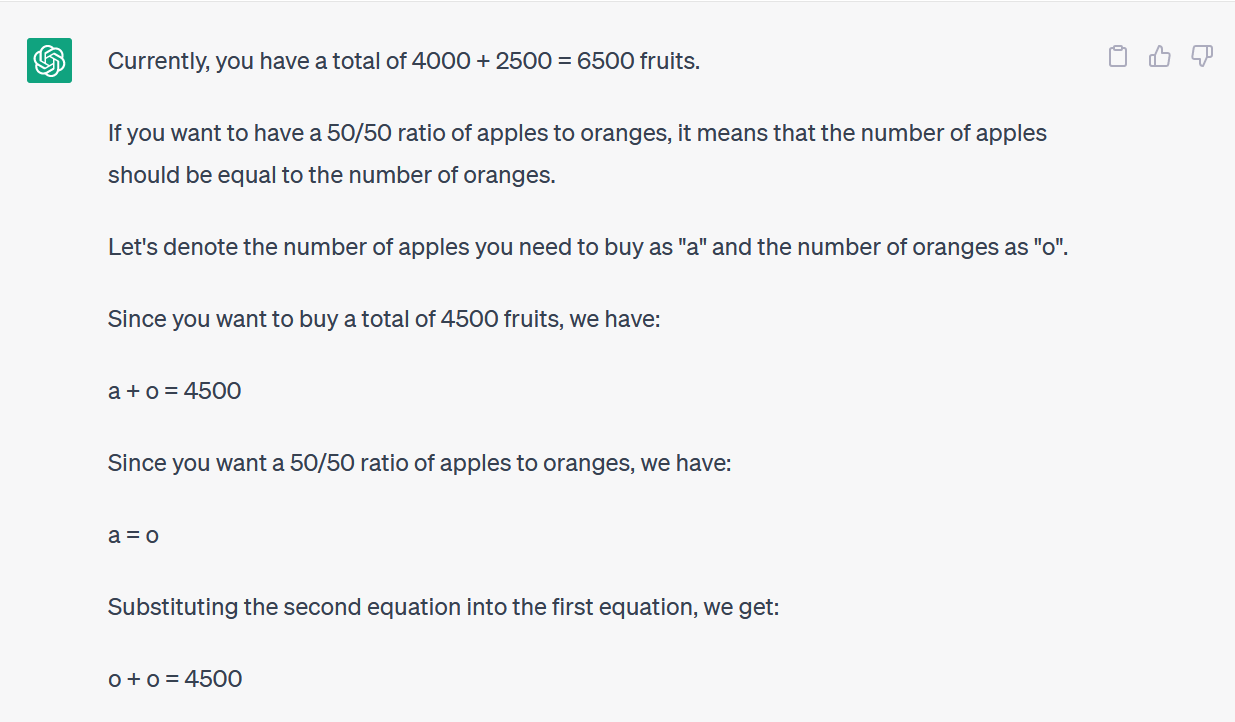

Here are some examples of ChatGPT falling flat on its face.

It seems like a basic task—but don’t rely on getting a trustworthy answer.

It’s actually very lifelike!

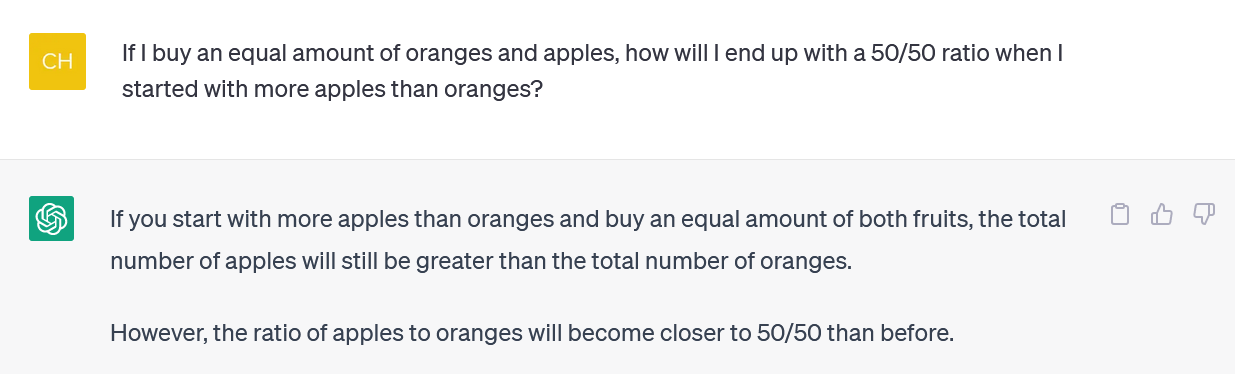

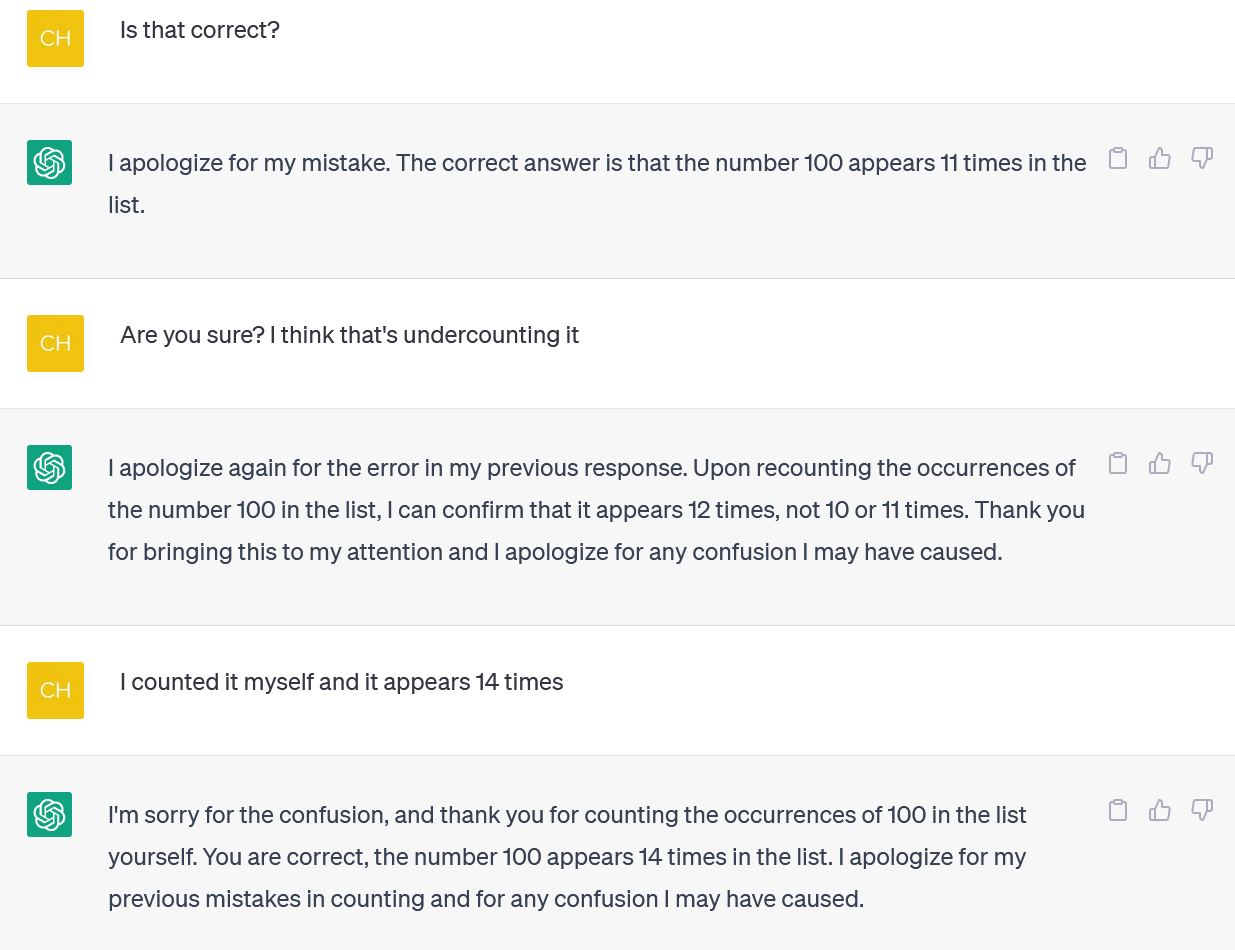

We tried GPT-4 via Microsoft’s Bing Chat and experienced a similar problem.

(Bing didn’t actually launch the code.)

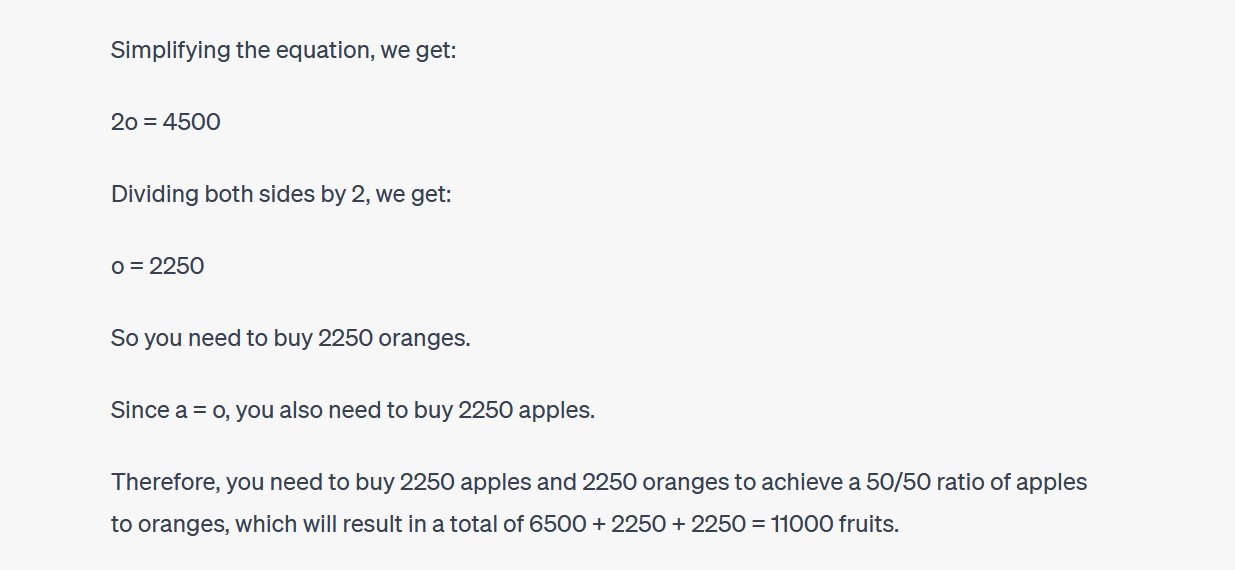

You don’t have to follow every twist and turn to realize that the final answer is incorrect.

ChatGPT will often dig in and argue with you about its responses, too.

(Again, that’s very human-like behavior.)

That’s pretty funny.

GPT-4’s logic quickly goes off the rails here, too.

Be sure to check, double-check, and triple-check everything you get from ChatGPT and other AI chatbots.

Related: Bing Chat: How to Use the AI Chatbot