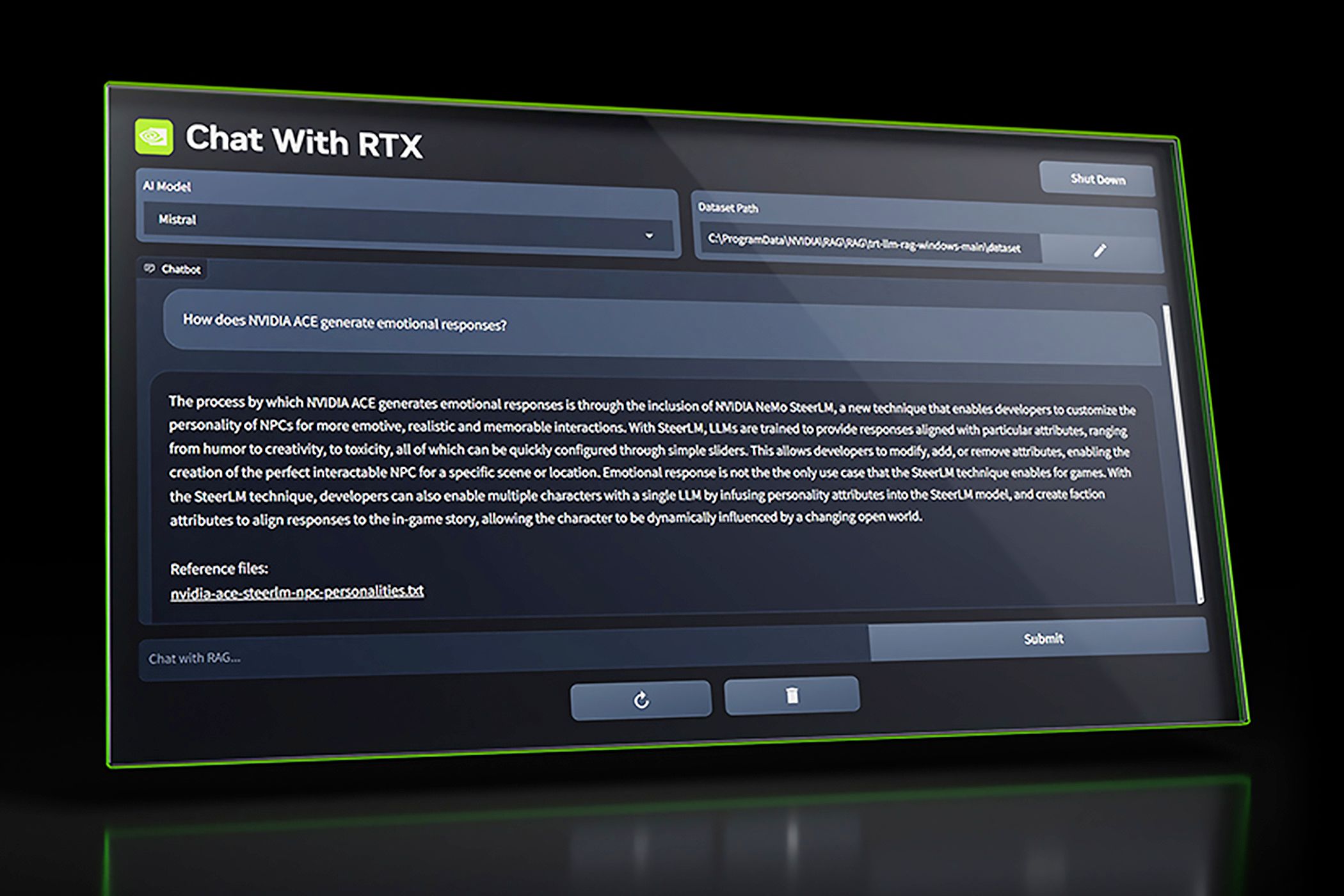

At its core, Chat With RTX is a personal assistant that digs through your documents and notes.

The personalized LLM can also pull transcripts from YouTube videos.

This works with standalone YouTube videos and playlists.


Because Chat With RTX runs locally, it produces fast results without sending your personal data into the cloud.

The LLM will only scan files or folders that are selected by the user.

I should note that other LLMs, including those from Hugging Face and OpenAI, can run locally.

We went hands-on with Chat With RTX at CES 2024.

It’s certainly a “tech demo,” and it’s something that must be used with intention.

But it’s impressive nonetheless.

I’m excited to see how people will experiment with this program.

You can install Chat With RTX from the NVIDIA website.

Note that this app requires an RTX 30-series or 40-series GPU with at least 8GB of VRAM.

NVIDIA also offers a TensorRT-LLM RAG open-source reference project for those who want to build apps similar to Chat With RTX.