It is fast, efficient, and can even learn from documents or YouTube videos you provide.

Here’s how to get it running on your PC.

What Do You Need to Run NVIDIA’s Chat With RTX?

Most modern gaming computers will be able to run NVIDIA’s Chat with RTX, as long as they have a GeForce RTX 30- or 40-series GPU with at least 8 GB of VRAM.

The installation files are about 35 gigabytes, so be prepared for the download to take a little while.
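If you’re not sure whether your graphics card qualifies, one quick check is to ask `nvidia-smi`, the command-line tool that comes with NVIDIA’s driver, how much VRAM each GPU has. The Python sketch below assumes `nvidia-smi` is on your PATH and uses a threshold of roughly 8 GB; that cutoff is an assumption, so compare it against NVIDIA’s current requirements.

```python
from __future__ import annotations

import subprocess

# Assumption: roughly 8 GB of VRAM as the minimum. 7.5 GiB is used as the cutoff
# because cards sold as "8 GB" may report slightly less than 8192 MiB.
MIN_VRAM_MIB = 7680


def parse_gpus(csv_text: str) -> list[tuple[str, int]]:
    """Parse nvidia-smi CSV output (name, total memory in MiB) into tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem)))  # int() tolerates surrounding spaces
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Ask nvidia-smi for each GPU's name and total VRAM (in MiB, no units)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpus(result.stdout)


def has_enough_vram(gpus: list[tuple[str, int]], min_mib: int = MIN_VRAM_MIB) -> bool:
    """True if at least one GPU meets the VRAM threshold."""
    return any(mem >= min_mib for _, mem in gpus)


if __name__ == "__main__":
    try:
        gpus = query_gpus()
    except (OSError, subprocess.CalledProcessError, ValueError):
        print("Couldn't query nvidia-smi; is an NVIDIA driver installed?")
    else:
        for name, mem in gpus:
            print(f"{name}: {mem} MiB")
        print("Enough VRAM:", has_enough_vram(gpus))
```

Parsing is kept separate from the `subprocess` call so the threshold logic can be checked without a GPU present.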

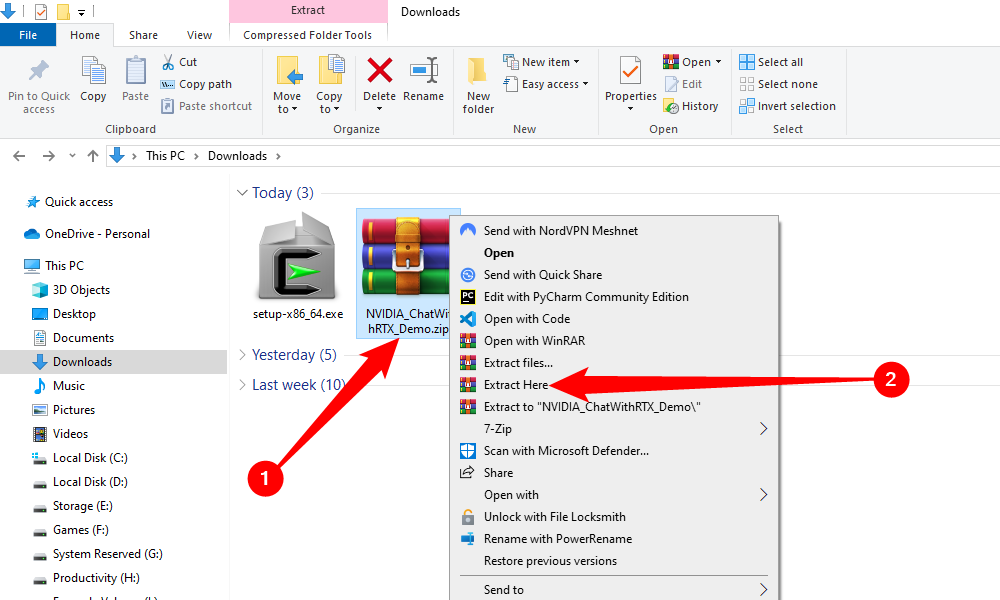

The files come zipped, and you should unzip them before you try to set up the software.

Once the extraction has finished, open the “ChatWithRTX_Offline_2_11_mistral_Llama” folder and double-click “Setup.exe.”
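If you’d rather script the extraction, Python’s built-in `zipfile` module can unpack the archive and locate the installer. This is a minimal sketch: the archive name and destination folder are whatever you pass in, and the recursive search for `Setup.exe` is there because the exact folder layout inside the download is an assumption.

```python
from __future__ import annotations

import zipfile
from pathlib import Path


def extract_and_find_setup(zip_path: str | Path, dest_dir: str | Path) -> Path | None:
    """Extract the archive into dest_dir and return the shallowest Setup.exe, if any."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    # The installer is expected inside "ChatWithRTX_Offline_2_11_mistral_Llama",
    # but search recursively so a different layout still works. Match the name
    # case-insensitively, since Windows file names are not case-sensitive.
    candidates = sorted(
        (p for p in dest.rglob("*") if p.is_file() and p.name.lower() == "setup.exe"),
        key=lambda p: (len(p.parts), str(p)),
    )
    return candidates[0] if candidates else None
```

For example, `extract_and_find_setup(r"C:\Downloads\ChatWithRTX.zip", r"C:\Downloads\ChatWithRTX")` (both paths hypothetical) returns the installer’s path, and on Windows calling `os.startfile()` on that path launches it just like double-clicking.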

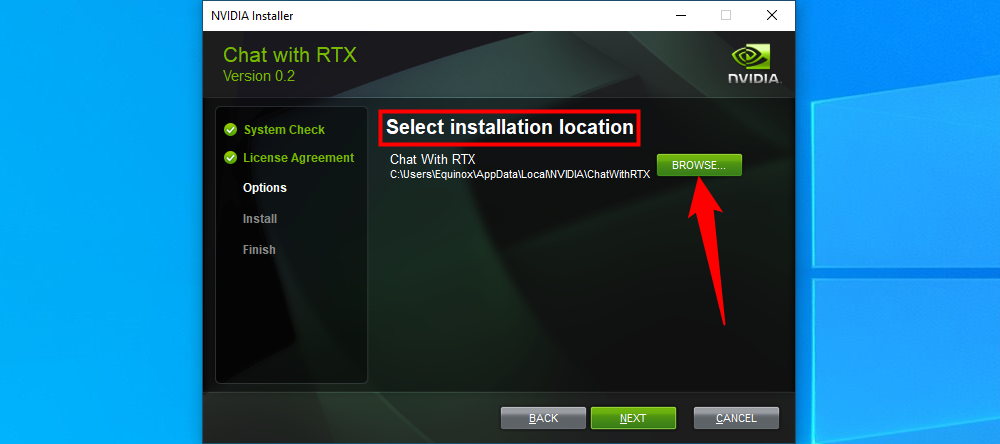

There aren’t many choices to make in the installer.

The only one to watch for is the installation location.
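Because the download, the extracted files, and the extra assets the installer fetches all need room, it’s worth confirming that the drive you pick has enough free space. This sketch uses Python’s `shutil.disk_usage`; how much space to require is your call, and any figure you choose is not an official NVIDIA number.

```python
from __future__ import annotations

import shutil
from pathlib import Path

GIB = 1024 ** 3


def free_gib(path: str | Path) -> float:
    """Free space, in GiB, on the drive that holds `path` (which need not exist yet)."""
    p = Path(path).absolute()
    # The install folder usually doesn't exist before installing, so walk up
    # to the nearest existing parent, stopping at the filesystem root.
    while not p.exists() and p.parent != p:
        p = p.parent
    return shutil.disk_usage(p).free / GIB


def has_room(path: str | Path, needed_gib: float) -> bool:
    """True if the drive holding `path` has at least `needed_gib` GiB free."""
    return free_gib(path) >= needed_gib
```

For instance, `has_room(r"D:\ChatWithRTX", 100)` checks for 100 GiB free on drive D:, where both the folder and the 100 GiB margin are purely illustrative.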

Again, don’t expect this to install super quickly.

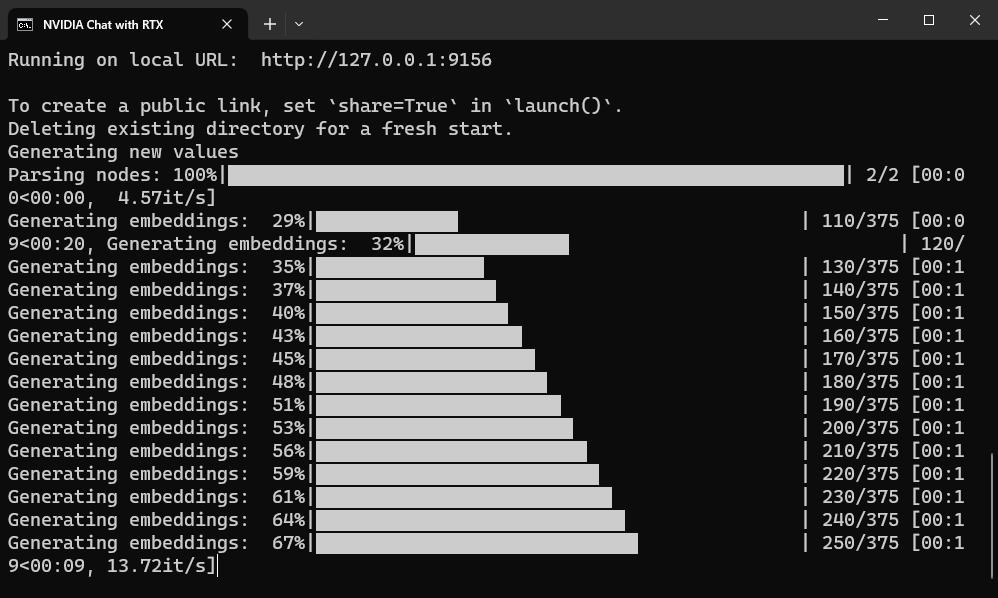

It has to download additional Python assets before it can run, and those are a few gigabytes each.

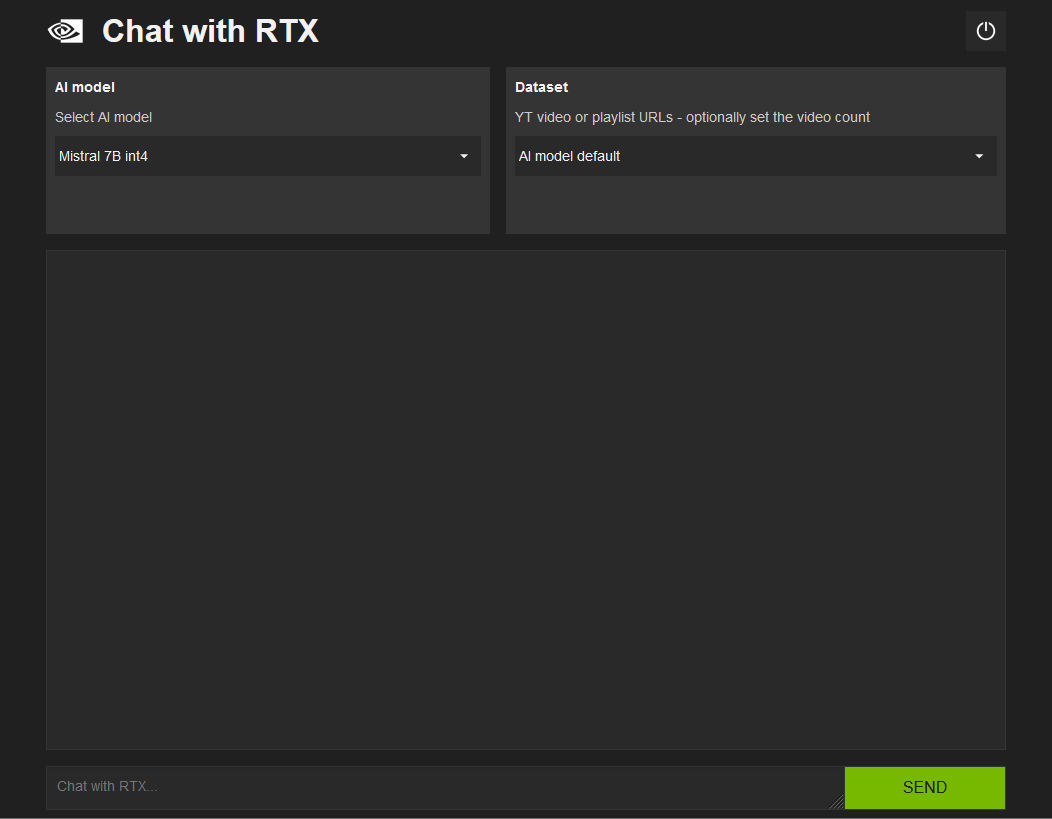

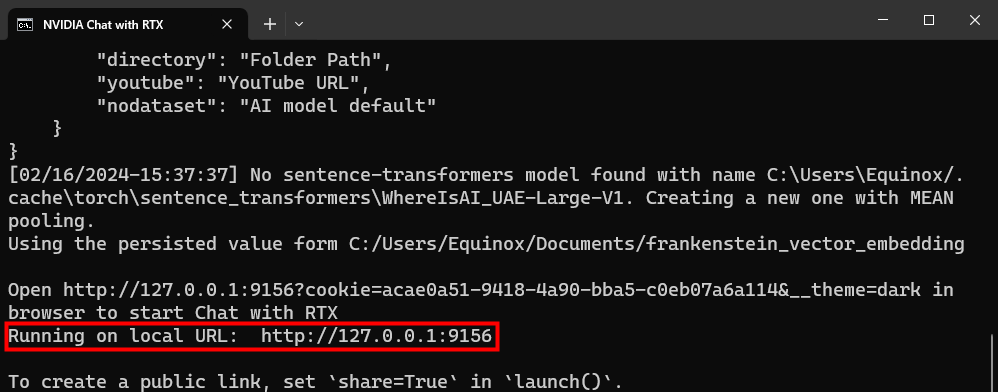

When it is ready, it should automatically open your web browser and display a user interface.

This isn’t a security risk: the interface runs on your own PC and isn’t accessible from the Internet unless you specifically set it up that way.

If the browser doesn’t open on its own, note the IP address and port it displays, then enter them into your web browser’s address bar.
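If the page doesn’t load, a few lines of Python can check whether the local server is actually answering and whether the address you noted points at your own machine. Substitute the IP address and port Chat with RTX shows you; nothing here is specific to NVIDIA’s software.

```python
import ipaddress
import urllib.error
import urllib.request
from urllib.parse import urlsplit


def is_loopback_url(url: str) -> bool:
    """True if the URL points at this machine (localhost, 127.x.x.x or ::1)."""
    host = urlsplit(url).hostname
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not a literal IP address (or no host at all): can't tell from the URL alone.
        return False


def ui_is_up(url: str, timeout: float = 2.0) -> bool:
    """True if something answers at `url` with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts and HTTP 4xx/5xx responses.
        return False
```

Call `ui_is_up()` with the full address, for example `http://127.0.0.1:` followed by the port it printed. Keep in mind that `is_loopback_url()` only looks at the address you typed; it can’t tell you which network interfaces the server itself is listening on.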

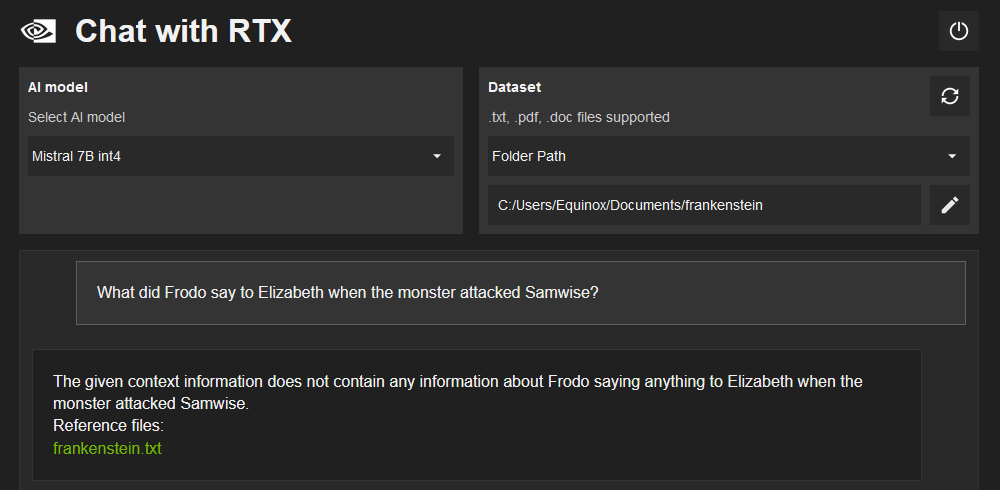

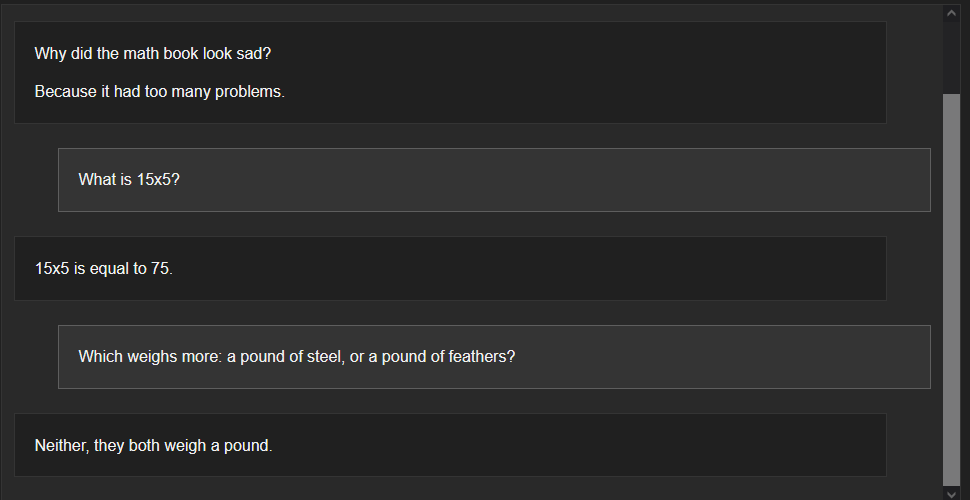

However, the really exciting feature is its ability to provide answers based on files or videos you provide.
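NVIDIA describes Chat with RTX as using retrieval-augmented generation (RAG): rather than retraining the model on your files, it finds the passages most relevant to your question and hands them to the model along with the question. The toy Python sketch below illustrates only that general idea. It ranks chunks by simple keyword overlap, whereas real RAG systems usually rank by embedding similarity, and it is not NVIDIA’s implementation.

```python
from __future__ import annotations

import re

# A tiny, illustrative stopword list so common words don't count as matches.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "on",
             "at", "for", "it", "what", "how", "does", "do", "much", "many"}


def tokenize(text: str) -> set[str]:
    """Lowercase word set, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS}


def chunk(text: str, size: int = 50) -> list[str]:
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(question: str, docs: dict[str, str], k: int = 2, size: int = 50):
    """Return up to k (score, source, chunk) tuples, best first; score = shared words."""
    q = tokenize(question)
    hits = []
    for source, text in docs.items():
        for c in chunk(text, size):
            score = len(q & tokenize(c))
            if score > 0:
                hits.append((score, source, c))
    hits.sort(key=lambda h: h[0], reverse=True)
    return hits[:k]


def build_prompt(question: str, docs: dict[str, str], k: int = 2) -> str | None:
    """Assemble the retrieved chunks into a prompt, or None if nothing matched."""
    hits = retrieve(question, docs, k)
    if not hits:
        return None  # a grounded system should say the sources don't cover this
    context = "\n\n".join(f"[{source}] {c}" for _, source, c in hits)
    return ("Answer using only the context below. If it doesn't contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {question}")
```

Returning `None` when nothing matches is the point where a grounded system would say your source doesn’t contain the answer; if loosely related chunks do match, the model may still answer from them anyway.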

Newer and more powerful GPUs will be faster.

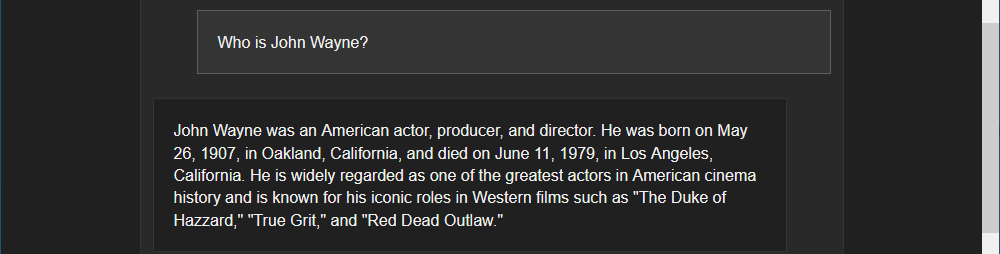

If it doesn’t know something, it will usually confidently declare an answer anyway.

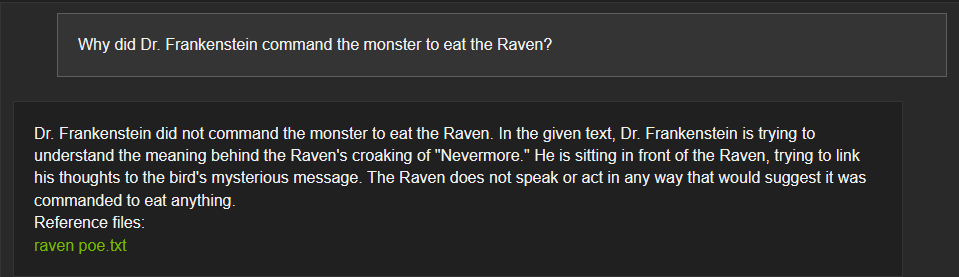

When you point it at your own files, it will often state outright that the source you provided doesn’t contain the information you asked about.

However, sometimes it doesn’t.

This becomes more noticeable when you provide more than one file at a time.
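One rough way to apply that skepticism, especially with several files loaded, is to check how much of an answer’s wording actually appears in each source. The heuristic below is an illustrative assumption, not something Chat with RTX does; word overlap can’t prove an answer is correct, but it can flag one that mixes details from different files.

```python
from __future__ import annotations

import re

# Illustrative stopword list; common words shouldn't count as "support".
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "on",
             "at", "for", "it", "what", "how", "does", "do", "much", "many"}


def content_words(text: str) -> set[str]:
    """Lowercase word set, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS}


def support_by_source(answer: str, sources: dict[str, str]) -> dict[str, float]:
    """Fraction of the answer's content words that appear in each source file.

    Word overlap is a crude proxy: a high score doesn't prove the answer is right,
    and a correct but paraphrased answer can score low.
    """
    words = content_words(answer)
    if not words:
        return {name: 0.0 for name in sources}
    return {name: len(words & content_words(text)) / len(words)
            for name, text in sources.items()}
```

With the sample sources `{"a.txt": "The meeting is on Tuesday at noon.", "b.txt": "Invoices are due Friday."}`, the answer “The meeting is on Friday.” scores 0.5 against each file, a hint that it blends the two, while “The meeting is on Tuesday.” scores 1.0 against `a.txt` and 0.0 against `b.txt`.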

As consumer hardware gets faster and sophisticated models become more optimized, the technology will become more reliable.

Just remember to treat everything it tells you with a bit of skepticism.