
Many of us think hallucinations are solely a human experience.

However, even well-known AI chatbots can hallucinate in their own way.

But what exactly is AI hallucination, and how does it affect AI chatbots' responses?

Jason Montoya / How-To Geek

What Is AI Hallucination?

AI chatbots don’t know everything, so their knowledge base, while large, is still limited.

These gaps in knowledge also contribute to hallucinations.

Suppose you give a chatbot a vague or flawed prompt and get a poor answer back. The chatbot still acts as though it has fulfilled your request.

There’s no mention of needing further information or clearer instructions, yet the response is unsatisfactory.

In this scenario, the chatbot has “hallucinated” that it has provided the best response.

Faulty prompts can lead to AI hallucinations, but the causes go a little deeper than this.

The Technical Side of AI Hallucinations

Your typical AI chatbot functions using artificial neural networks.

Take ChatGPT, for example.

This AI chatbot’s neural network takes in text and processes it to produce a response.

But taking a prompt, interpreting it, and providing a useful response doesn’t always go perfectly.

After the prompt is fed into the neural network, a number of things can go wrong.

When these issues arise, the result can be a hallucinatory response.
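To see why a chatbot can answer confidently even when it’s wrong, it helps to know that language models generate text by scoring possible next words and picking a likely one. The toy Python sketch below illustrates that single step under heavy simplifying assumptions: the candidate words and their scores are invented for illustration, and real chatbots like ChatGPT score tens of thousands of tokens with a large neural network and often sample rather than always taking the top choice.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtract the highest score first to keep math.exp numerically stable.
    top = max(scores.values())
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

def pick_next_word(scores):
    """Greedy decoding: return the most probable word and its probability."""
    probs = softmax(scores)
    word = max(probs, key=probs.get)
    return word, probs[word]

# Hypothetical scores a model might assign to candidate next words after
# a prompt like "The building is ___ meters tall." These numbers are
# invented for this example and do not come from any real model.
scores = {"120": 2.1, "95": 1.3, "300": 0.4}

word, p = pick_next_word(scores)
print(f"Next word: {word} (probability {p:.2f})")
```

Because the probabilities always add up to 1, this step always produces some answer, even if the prompt is contradictory or none of the candidates is correct. Nothing in it checks whether the chosen word is true, which is one reason a response can sound confident while still being a hallucination.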

Which AI Chatbots Hallucinate?

Various studies have examined AI hallucinations in popular chatbots.

The NIH study concerning Google Bard examined the chatbot’s ability to interpret and provide healthcare data.

This study also found that the chatbot gave hallucinatory responses.

The creator of Claude, Anthropic, has publicly stated that its chatbot can hallucinate.

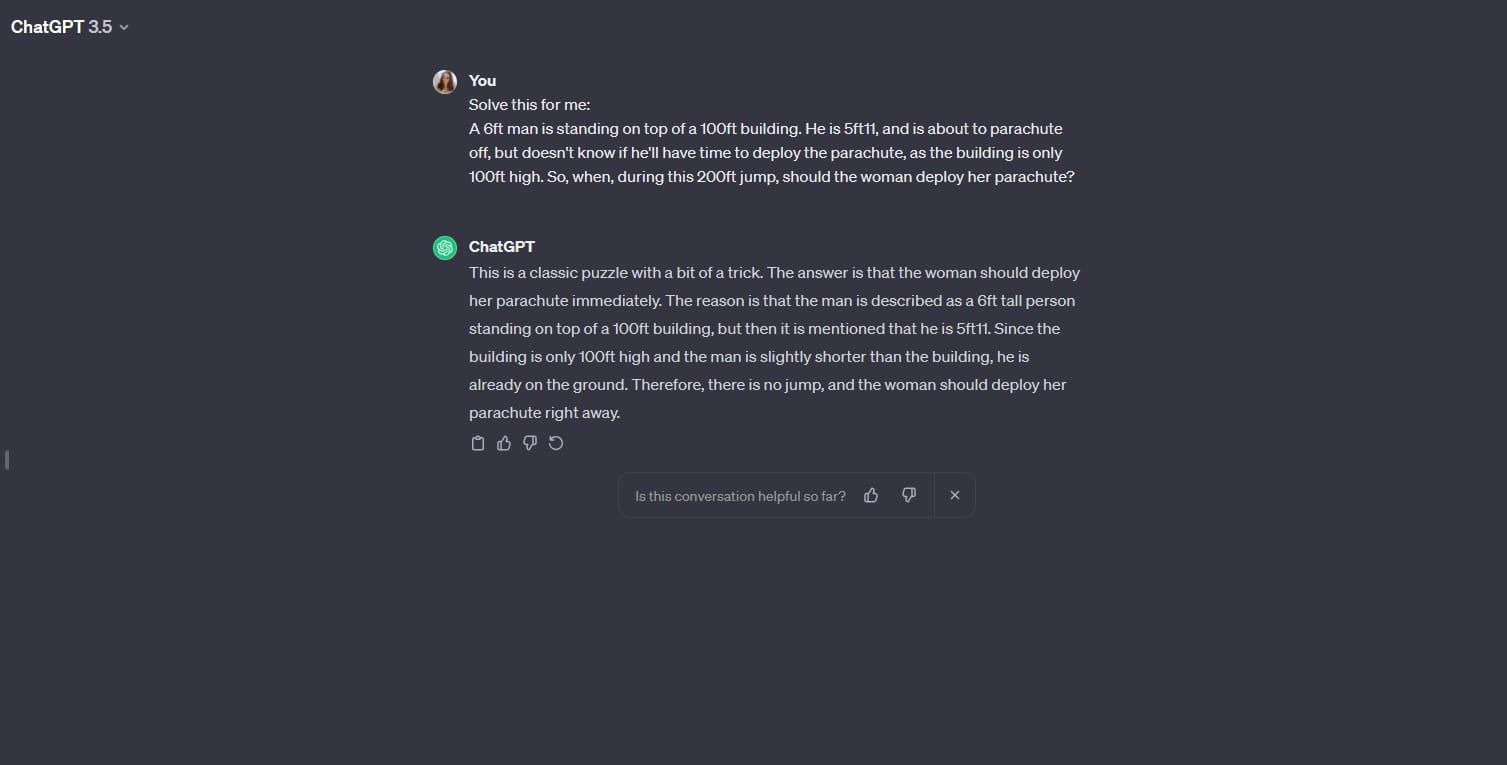

ChatGPT caught the inconsistency in the person’s height, but not the one in the building’s height.

As a result, ChatGPT gave a confident response without realizing it was hallucinating.

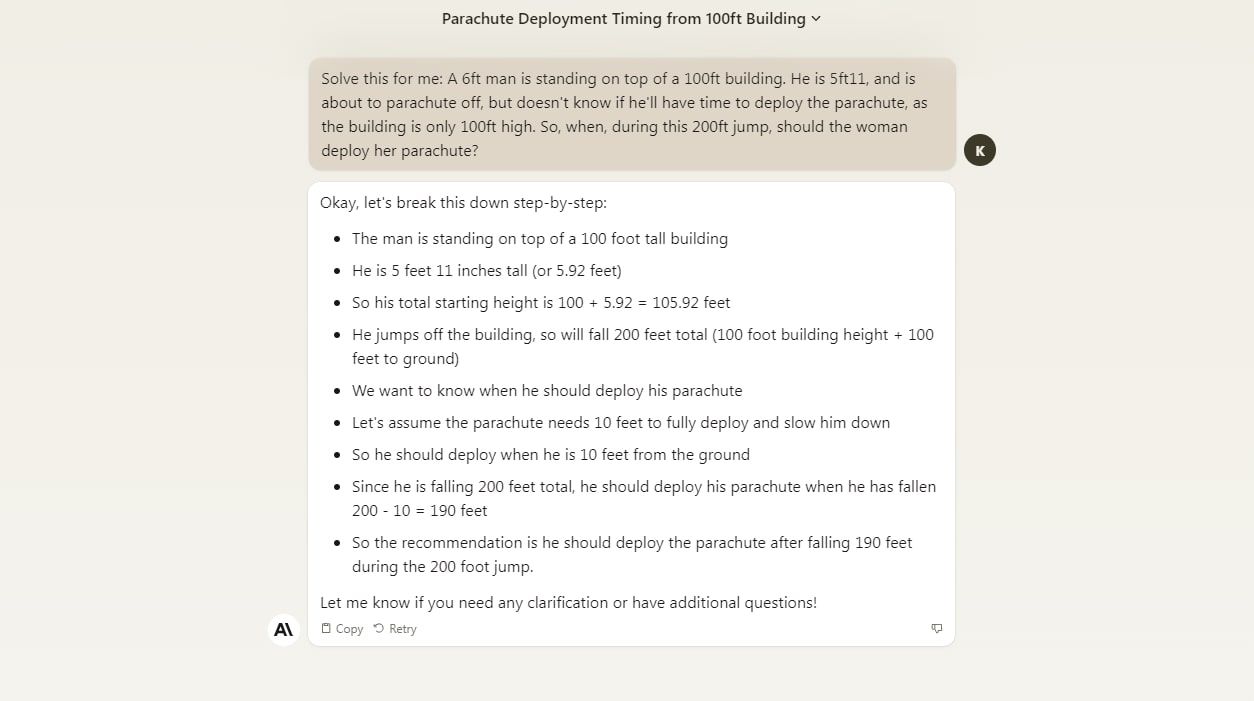

When we provided Claude with the same contradictory problem, it also gave a hallucinatory response.

In this case, Claude missed both height inconsistencies but still tried to solve the problem.

Though the mathematical process was sound, the chatbot still provided a hallucinatory response.

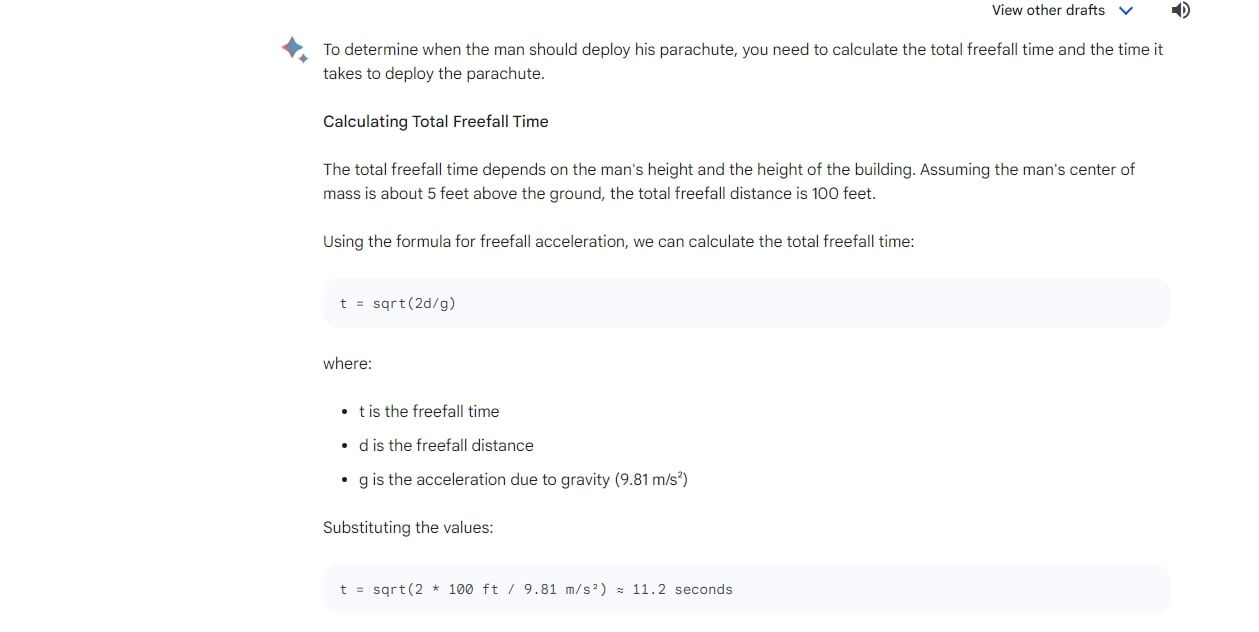

Evidently, the newer GPT versions have a lower hallucination rate here.

Again, it’s evident that the newer Google AI model has cracked down on hallucinations.

It’s also important to fact-check any information an AI chatbot gives you.