So, what is an LLM, and how does it allow AI to hold conversations with humans?

What Is an LLM?

The term “LLM” is short for Large Language Model.

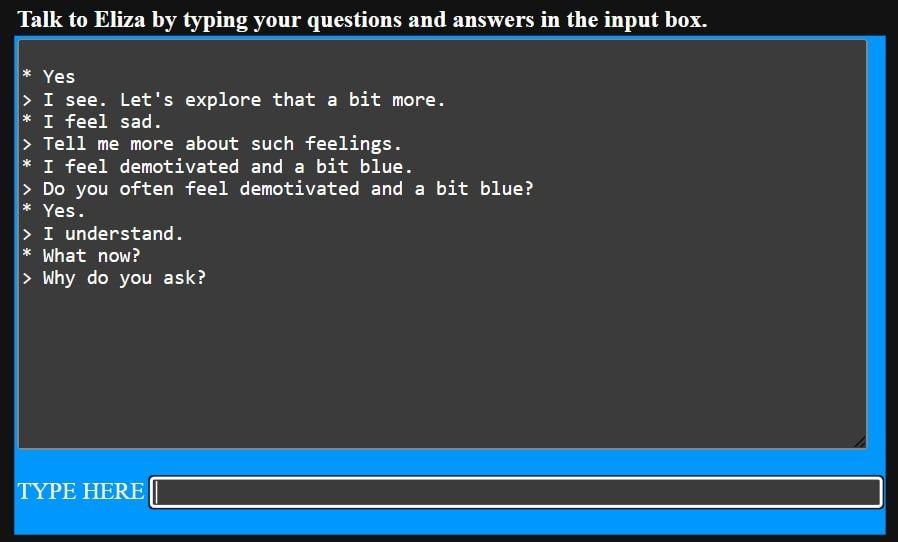

New Jersey Institute of Technology/Joseph Weizenbaum

The concept of conversational computers was around long before the first example of this technology was put into practice.

Back in the 1930s, the idea of a conversational computer arose, but remained entirely theoretical.

Decades later, in 1966, the world’s first chatbot, ELIZA, was created.

Katie Rees

The New Jersey Institute of Technology still provides a web-based version of ELIZA that you can interact with today.

As a quick session with it shows, ELIZA isn’t great at interpreting language or providing useful information.

While ELIZA was an innovative invention, it wasn’t an LLM.

In fact, it took another 47 years for a technology close to today’s LLMs to emerge.

In 2013, an algorithm known as word2vec became the LLM’s most recent ancestor.

This ability to create associations between words was a huge step towards modern LLMs.
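To get a feel for what an association between words can look like in practice, here is a minimal sketch in Python. It assumes each word is represented by a short list of numbers and compares words by cosine similarity, a common way to measure how close two vectors are. The vectors in `toy_vectors` are hand-picked, hypothetical values, not real word2vec output, and `most_similar` is an illustrative helper rather than part of any library.

```python
import math

# Hand-picked toy vectors (hypothetical values, NOT real word2vec output).
toy_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, vectors):
    """Return the other word whose vector points in the most similar direction."""
    return max(
        (other for other in vectors if other != word),
        key=lambda other: cosine_similarity(vectors[word], vectors[other]),
    )


print(most_similar("king", toy_vectors))  # prints: queen
```

In a trained model, the vectors have hundreds of dimensions and are learned from large amounts of text rather than written by hand, but the comparison step works along the same lines.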

Prior to BERT’s release, several Google researchers wrote and published a paper titled “Attention Is All You Need.”

This paper set the stage for transformers by introducing the architecture to the world.

We’ll get a little more into how transformers work later on.

So, what’s behind this impressive technology?

How Do LLMs Work?

A crucial element that a lot of popular LLMs need is pre-training.

Take OpenAI’s GPT-3.5, for example.

GPT-3.5’s training also involved two other aspects, known as “reinforcement” and “next-word prediction.”
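Next-word prediction is easier to picture with a toy example. The sketch below is not how GPT-3.5 works internally. It is a simple word-pair counter (a bigram model) that guesses the next word from how often it followed the current word in a tiny sample text. The function names and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat slept"
counts = train_bigram_counts(corpus)
print(predict_next(counts, "the"))  # prints: cat
```

An LLM does the same job at a vastly larger scale: instead of counting word pairs, it uses a neural network to estimate which token is likely to come next given everything before it.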

However, other kinds of LLMs go through a different preliminary process, such as multimodal training or fine-tuning.

In multimodal models, one form of input media is converted to another form of output media.

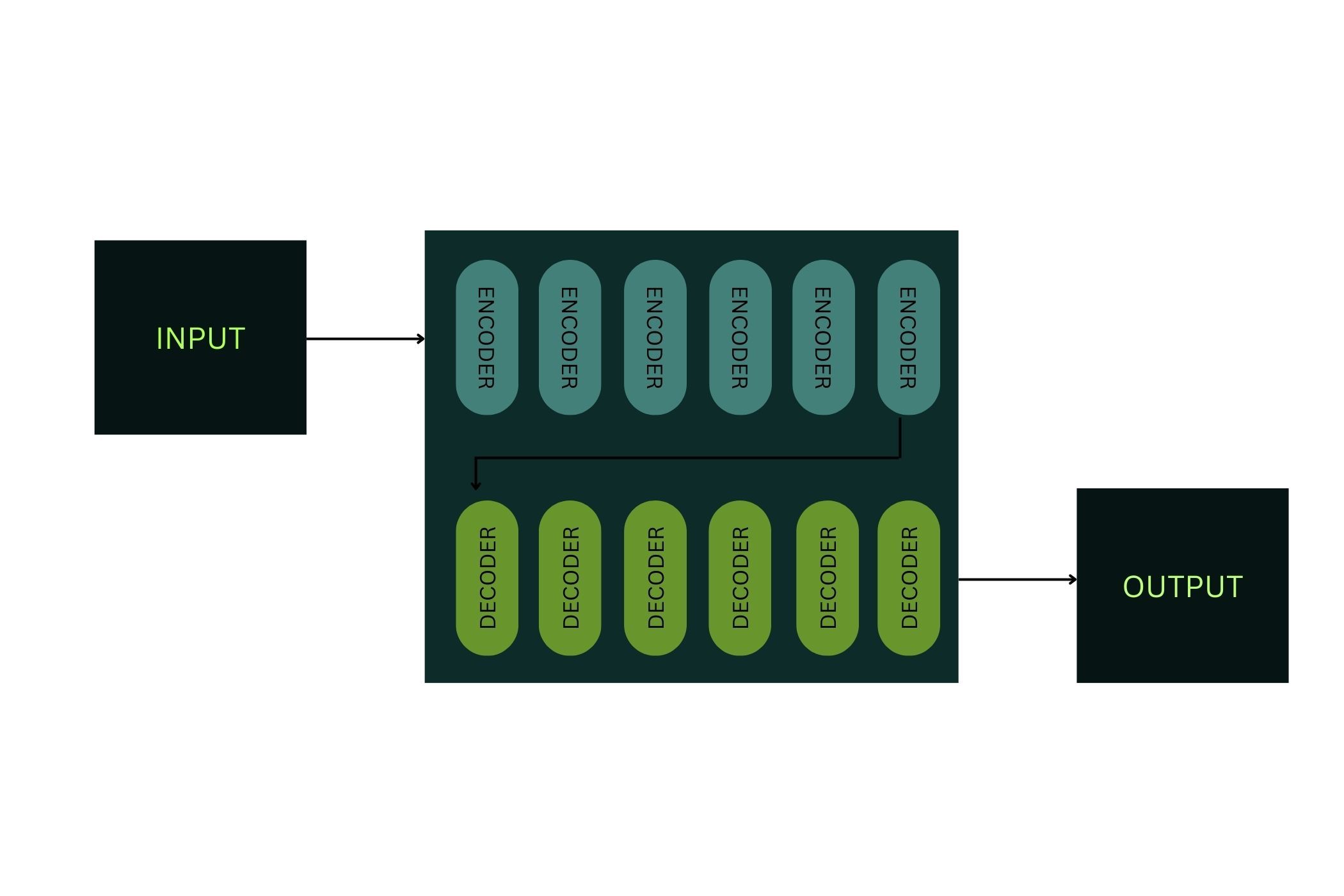

First, text-based data reaches the encoder, and is then converted to numbers.
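As a rough illustration of turning text into numbers, the sketch below assigns each word an integer ID. This is a simplification: it assumes plain whitespace splitting, whereas production LLMs generally use subword tokenizers and then map each ID to a learned vector. The names `build_vocab` and `encode` and the `<unk>` placeholder are illustrative choices.

```python
def build_vocab(texts):
    """Assign an integer ID to every distinct word; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Convert a sentence into a list of integer token IDs."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]


vocab = build_vocab(["the cat sat", "the dog sat"])
token_ids = encode("the dog ran", vocab)
print(token_ids)  # prints: [1, 4, 0]
```

Here "ran" never appeared in the sample sentences, so it falls back to the unknown-word ID of 0.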

This process is also known as transformer self-attention, or multi-head attention.

Self-attention involves the transformer performing multiple calculations at once while comparing various input tokens to each other.

From this, an output attention score is calculated for each token, or word.
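The comparison between tokens can be sketched in a few lines of Python. This is a stripped-down, single-head version of scaled dot-product self-attention. It assumes each token is already a small vector and, for simplicity, uses the token vectors directly as queries, keys, and values. Real transformers first pass the tokens through learned weight matrices and run several attention heads side by side, which this sketch leaves out. The input vectors are made-up values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head self-attention with queries = keys = values = tokens (no learned weights)."""
    dim = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Compare this token against every token (including itself).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # Blend all token vectors, weighted by their attention scores.
        output = [
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # made-up 2-dimensional token vectors
outputs, weights = self_attention(tokens)
print(weights[0])  # attention the first token pays to each of the three tokens
```

In this example, the first and third tokens have identical vectors, so the first token pays equal attention to itself and the third token, and less to the second.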

Now, it’s time to decode the numerical data into a text-based response.
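One simple way to picture this decoding step is shown below. The sketch assumes the model has produced one raw score per vocabulary entry, converts those scores to probabilities, and picks the most likely token (often called greedy decoding). The vocabulary, the scores, and the function name `greedy_decode_step` are all hypothetical, and real chatbots often sample from the probabilities rather than always taking the top choice.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_decode_step(logits, id_to_token):
    """Pick the single most probable token for one output position."""
    probs = softmax(logits)
    best_id = max(range(len(probs)), key=probs.__getitem__)
    return id_to_token[best_id], probs[best_id]


id_to_token = ["<unk>", "hello", "world"]  # hypothetical three-token vocabulary
logits = [0.1, 2.0, 0.5]                   # made-up scores for one position
token, prob = greedy_decode_step(logits, id_to_token)
print(token)  # prints: hello
```

Repeating this step, feeding each chosen token back in as new input, is how a response gets built up one token at a time.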

Where Are LLMs Used?

These nifty tools began rising in popularity in late 2022, after OpenAI released GPT-3.5.

Since then, the range of applications for LLMs has widened vastly, but AI chatbots remain very popular.

This is because AI chatbots can offer users a myriad of services.

But things don’t stop with AI chatbots.

Some very well-known LLMs are used outside of chatbot environments.