As existing models expand to accept more input modalities, AI tools are only going to get more advanced.

What Does “Multimodal” Mean?

Multimodal AI can accept two or more types of input, such as text and images.

Justin Duino / How-To Geek

This applies both to how the model is trained and to how you interact with it.

These modes can be prioritized within the model, giving some inputs more weight than others depending on the result it is meant to produce.
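The article doesn't describe how Gemini, GPT-4, or any other specific model combines its inputs, and real models learn this internally. Purely as a toy illustration of "weighting" modes, the sketch below assumes each input has already been converted into a numeric feature vector of the same length and blends them with hand-picked weights, a simple scheme often called late fusion. The function name `weighted_fusion`, the example vectors, and the weights are all hypothetical.

```python
from typing import Dict, List


def weighted_fusion(embeddings: Dict[str, List[float]],
                    weights: Dict[str, float]) -> List[float]:
    """Toy late fusion: blend per-modality vectors using normalized weights.

    Hypothetical example only; real multimodal models learn how to combine
    modalities during training rather than using fixed hand-set weights.
    """
    # Normalize the weights of the modalities actually present so they sum to 1.
    total = sum(weights[m] for m in embeddings)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    # Every modality vector must share the same length (assumed common space).
    dim = len(next(iter(embeddings.values())))
    fused = [0.0] * dim
    for modality, vector in embeddings.items():
        if len(vector) != dim:
            raise ValueError(f"{modality} vector has the wrong length")
        w = weights[modality] / total
        # Add this modality's weighted contribution, element by element.
        for i, x in enumerate(vector):
            fused[i] += w * x
    return fused


if __name__ == "__main__":
    # Give the text input three times the influence of the image input.
    print(weighted_fusion({"text": [1.0, 0.0], "image": [0.0, 1.0]},
                          {"text": 3.0, "image": 1.0}))  # [0.75, 0.25]
```

Raising the text weight pulls the combined result toward the text input; a real model would adjust this balance on its own based on what it learned in training.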

Multimodal models are an evolution of the unimodal models that saw an explosion in popularity during 2023.

Unimodal models can only take a prompt through a single type of input, such as text.

How Is Multimodal AI Better Than Regular AI?

Multimodal AI is the logical evolution of current AI models, and because it can learn from more than one kind of data, it allows for more knowledgeable models.

Let's say you wanted to create a new image based on a photo you had taken.

You could feed the photo to an AI and describe the changes you wanted to see.

These types of models could produce better results even if you're only interacting with them over text.

Of course, you should always maintain a healthy level of skepticism when conversing with a chatbot.

Multimodal AI is gradually making its way into everyday technology.

All of this could be useful to an assistant in the right context.

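The implications for industry are vast. A model that can take in temperature readings, images, and sound from a machine could help answer questions like these: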

Is a component getting hot?

Does the component look worn?

Is it louder than it should be?

Some Examples of Multimodal AI

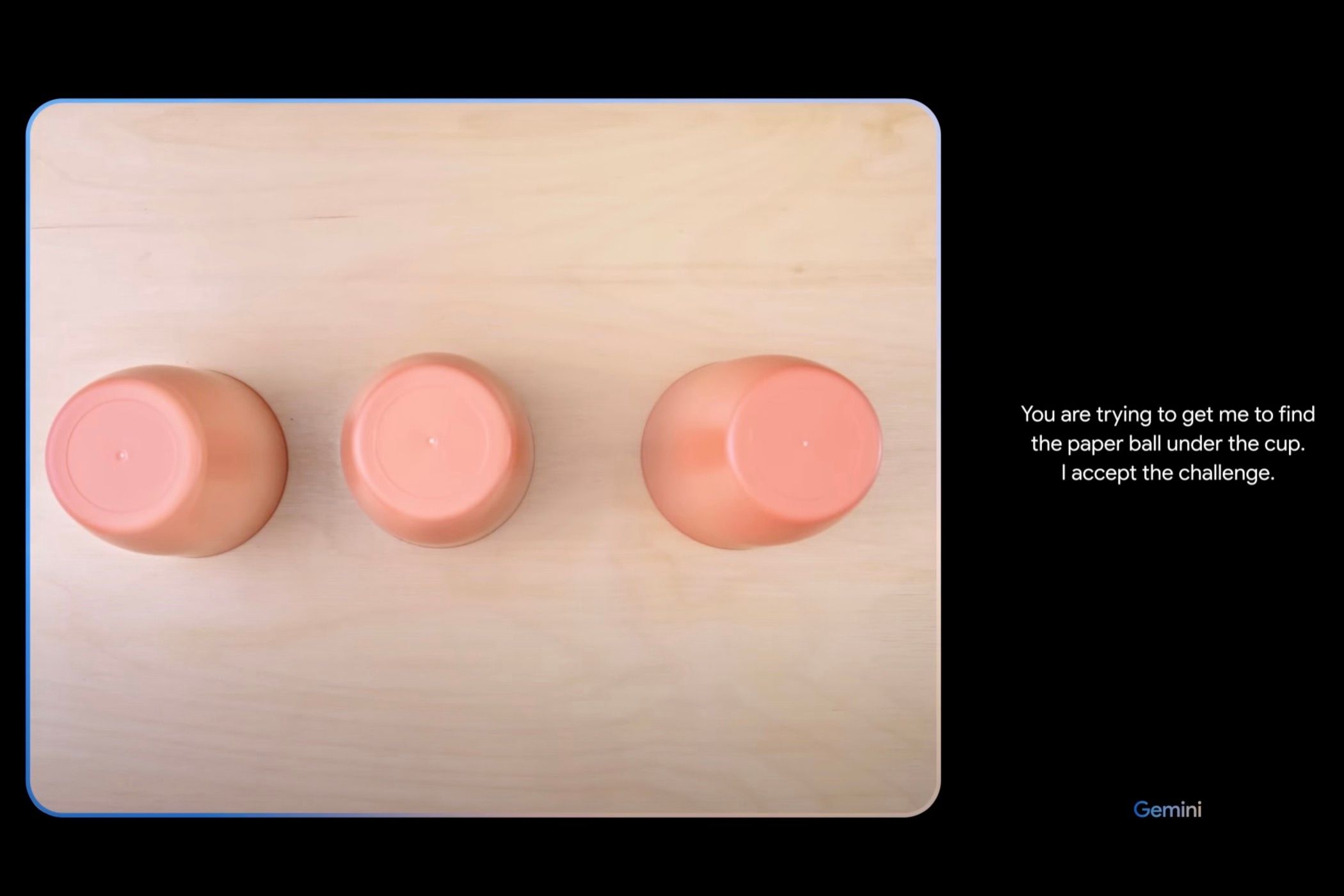

Google Gemini is perhaps one of the best-known examples of multimodal AI.

The model hasn't been without controversy: detractors branded a video demonstrating the model, released in late 2023, as fake.

Developers can already get started with Gemini today simply by applying for an API key in Google AI Studio.
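Once you have a key, a request can combine text and an image in a single prompt. The sketch below uses only the Python standard library and builds (but does not send) a request for the Gemini REST `generateContent` endpoint, with the text and a base64-encoded image as separate "parts" of one prompt. The `v1beta` path and the `gemini-pro-vision` model name reflect Google's documentation as of early 2024 and are assumptions that may have changed; the helper names `build_multimodal_payload` and `build_request` are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: endpoint version and model name as documented in early 2024.
# Check Google AI Studio's current docs before relying on these values.
MODEL = "gemini-pro-vision"
ENDPOINT = ("https://generativelanguage.googleapis.com/v1beta/models/"
            f"{MODEL}:generateContent")


def build_multimodal_payload(prompt: str, image_bytes: bytes,
                             mime_type: str = "image/jpeg") -> dict:
    """Package a text prompt and an image into one request body."""
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    # Images are sent inline as base64-encoded data.
                    {"inline_data": {
                        "mime_type": mime_type,
                        "data": base64.b64encode(image_bytes).decode("ascii"),
                    }},
                ]
            }
        ]
    }


def build_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Create, but do not send, the HTTP POST request."""
    return urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To actually send it, you would read a photo with `open("photo.jpg", "rb").read()`, pass it to `build_multimodal_payload` along with a question such as "Describe this photo.", and hand the result of `build_request("YOUR_API_KEY", payload)` to `urllib.request.urlopen`. Google also publishes official client libraries that wrap this request for you.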

It goes head to head with OpenAI's GPT-4, which can accept prompts of both text and images.

Just choose the image icon in the "Ask me anything…" box to attach an image to your query.